In my last experiment, I fine-tuned a custom Stable Diffusion 1.5 with tokens for my whole family using FastBen’s notebook on Google Colab Stable diffusion model. You can see the particulars of the training in my last post about training an AI image generator on four people.

Despite that I wasn’t successful in generating images with four distinct people for a specific purpose, I nonetheless decided to get some results from a subset of two tokens from the four tokens trained.

The concept that I wanted to execute was the idea of Meighen and me as famous couples. I had put together a list of iconic couples, and my goal was to come up with prompts and processes to get good images where our likenesses were substituted into the scene. One significant departure from my previous trials is that I have started incorporating negative prompts into the renderings.

Negative Prompts

Following a few weeks of thrashing about, I finally stumbled upon negative prompts in Stable Diffusion. Negative prompts are where you put what you don’t want to see in the image. You often see artifacts like mangled fingers, amputations, distorted faces, and weird prompts. There are a lot of negative prompts out there on the internet. Most negative prompts focus on eliminating duplicate images, extra fingers, and misshapen phases. I found one on Moritz’s Blog that seems to do a pretty good job of helping the AI Converge on an aesthetic image.

There are many more out there. One challenge of using negative prompts is that you may constrain the AI so much that you miss out on “happy accidents.” using your custom Stable Diffusion model. For example, what the weights say may be “bad Anatomy” or “bad proportions” may instead be just a stylized interpretation of your prompt. In this experiment, I erred on the side of extensive negative prompts because I was trying to get a good likeness of the two subjects. This test is about training two subject tokens simultaneously and then creating inferences that have both subjects in them.

Prompts for a custom Stable Diffusion Model Trained on Two Subjects

Baseline and Reference Prompts

- Plain vanilla baseline images of each token and both together.

- Select prompts from my previous experiments.

- Both tokens rendered into scenes of famous couples.

Famous Couples

- Anthony and Cleopatra

- Dr. Evil and Frau Farbissina in Austin Powers

- Satine and Christian in Moulin Rouge

- Anna and The King

- Morticia and Gomez Addams

- Walter and Hildy from His Girl Friday

- Bridget Jones and Mark Darcy from Bridget Jone’s Diary

- The Joker and Harley Quinn from

Burningman Prompts

Lastly, I did a series of prompts around Burningman, a venue with a distinctive visual style. One that I’m familiar with, and it solves a real-world challenge for me -Coming up with a new look every year for the trip out to the desert.

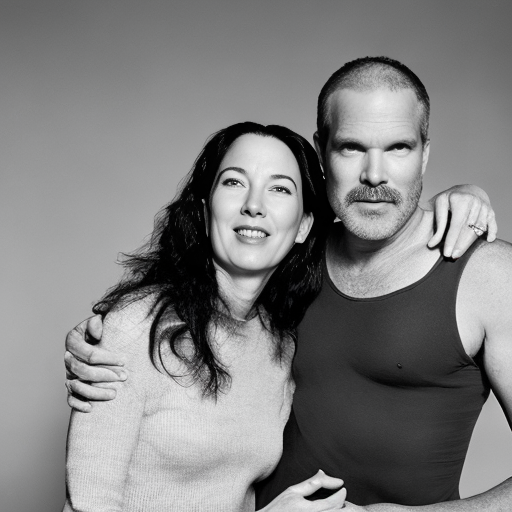

Baseline prompts

First, I wanted to see what was possible with plain vanilla.

Not in a promising start. The renderings of what it’s supposed to be my likeness don’t capture my facial features that well. It rounds the cheeks and smooths the skin and makes me much chunkier than any of the instance images that I trained the model on would suggest.

The initial renderings of Meighen were even less complimentary. The AI grabbed the balding and blotchy skin from my instance images, elongated her face, and thinned out her hair. Meighen would not be happy about that one.

The following Baseline rendering of both subjects together fared no better. Although the likeness of Meighen generated by her token was closer to the instance images, the token trade of my likeness did not create images that looked anything like me. Instead, they are a bizarre combination of Benedict Cumberbatch and Ari Fleischer.

From here, I ran through some of the prompts I had used in previous trials when generating inference images of single subjects, this time with couples. I wanted a few reference images so that I could compare the output of each custom Stable Diffusion model.

Trying to build up from a baseline rendering by constructing prompts that emphasized features in the end images that more distinctively described each subject seemed like a challenging strategy.

Fashion Photographers in the Prompt

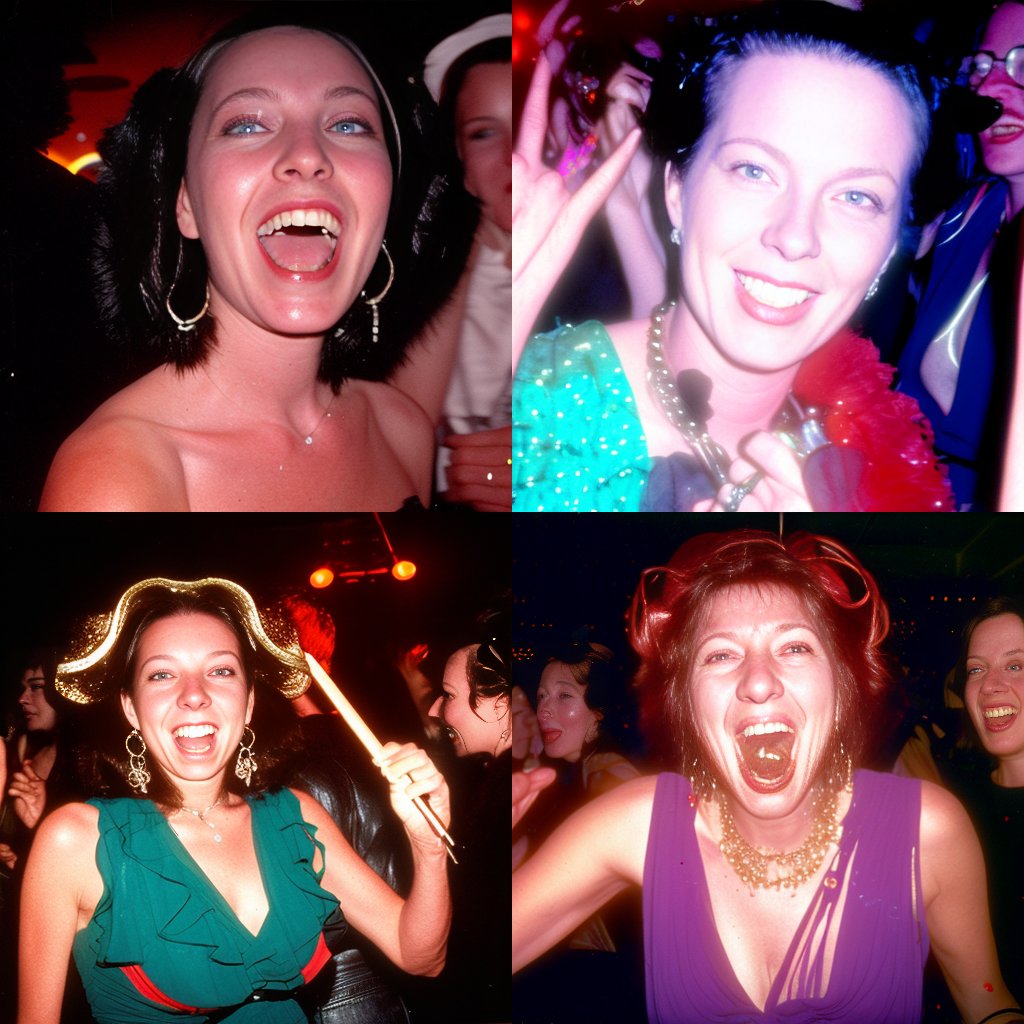

An idea I had to get better renderings was to use the names of fashion photographers in the prompt. Fashion photographers tend to take pictures that make their subjects aesthetically pleasing. This didn’t work out. I moved the parameters all around, but didn’t get anything good. Outside the context of Studio 54, the strategy paid off with some pretty good images.

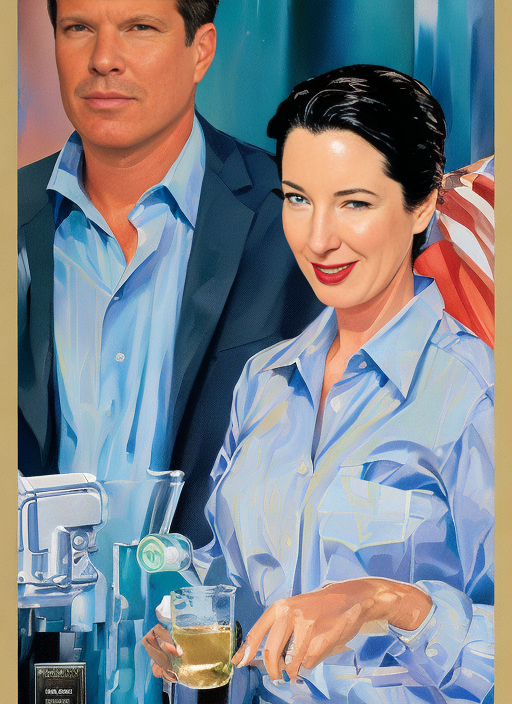

Movie Key Art

Steps: 40, Sampler: DDIM, CFG scale: 5

After warming up on the baseline prompts, I was ready for the main program

Anthony and Cleopatra

The first rendering of Antony and Cleopatra, although it’s better, didn’t look anything like us. Jacking up the CFG scale didn’t help. And the AI seemed reluctant to render images of both subjects in the frame. I attempted this prompt many more times I finally got something close to like this but ultimately not satisfactory.

After thrashing about for quite a bit, I returned to this prompt later in my session. And I got an image that had a render closer to my likeness and more of the composition I was going for.

Dr. Evil And Frau Farbissina

This prompt yielded no better results than the previous one. the woman looks like neither Meighen nor Frau Farbissina. Aside from being bald, the male character looks neither like Dr. Evil nor me. I appreciate the unprompted latex bodysuits </snark>, but it was not what I was going for. As an aside, staple diffusion 1.5 seems to be a bit horny. When prompted with words that indicate superhero or sci-fi fantasy, The AI puts the characters in a skin-tight bodysuit. Sometimes that’s what one is going for. Mostly not.

Satine and Christian in Moulin Rouge

What I was going for with Satine in a crystal leotard like in the movie End Christian in ordinary clothes of the time. I got imagery steeped in “dark circus” regalia, with little reference to either character.

Anna and the King

I tried various prompts, and although I got some of the Dynamics of the show in the movie, I could not get the AI to render a good likeness of either Anna or the king. Or even someone who looked vaguely like Yul Brynner or ethnically Thai.

Gomez and Morticia Addams

I had more luck here because I had been trying to get good renters of these characters for the Christmas card experiment (link). The token notMeighen makes it pretty good Morticia and renders consistently. Unfortunately, the token trained with my instance images did not generate so distinctively as Gomez Addams, although I got some pretty good pictures.

Walter and Hildy from His Girl Friday

I thought a mainstream 1960s rom-com would less likely generate characters wearing shiny leather. I didn’t have to do much prompting to get a clean, realistic rendering that was an excellent likeness to the trained tokens. Nonetheless, the influence of the features of the actors in the movie on the rendering influenced the final image. I tried a combination of emphasis on different words in the prompt to get what I thought was the most pleasing of the rendered images.

Bridget Jones and Mark Darcy from Bridget Jone’s Diary

This prompt suffered the same challenges as the last one. The distinctive features are the actors playing the characters tended to overwhelm the training are the AI model.

the Joker and Harley Quinn

Even though I referenced the movie title “The Suicide Squad” in the prompt, I got a lot of shiny bodysuits. There is not a lot of Spandex to be seen in The Suicide Squad, yet still, the AI rendered tight leather. And the prompt overwhelmed the token training.

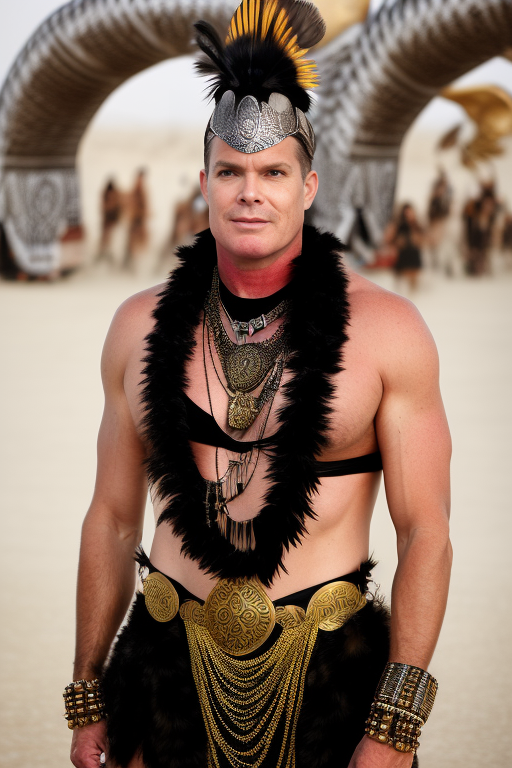

Burningman Prompts

I’ve spent a lot of time at Burning Man, but I haven’t been able to go for the last three years. The first two years, because of covid, and the last year because I could not get tickets. Being able to render AI-generated images of Burning Man satisfied a desire for the Burningman experience. The kind of costumes and outfits the AI can generate excites my imagination. However, to get something special, you need to add a lot of qualifiers for the prompt words. The AI seems to have been trained on the most quotidian of outfits. Here are my attempts to whip up something fabulous. I also found that the model could generate fairly close likenesses of both subjects in this context. The AI may understand that these two characters belong in that context. The AI can be extraordinarily generous in it’s renderings even when it prematurely ages you.

I’ve become uncomfortable with some of the renderings being an idealized simulations of what the subject actually looks like. Am I doing harm in some way given the societal environment where people feel such pressure to be attractive? Many girls including my own experience body dysphoria from the constant assault of digitally airbrushed social media imagery. I think it may. However, I don’t feel so bad about synthetic Burningman imagery, because the whole event is fantastical.

The nature of my projects so far has been to see if I could generate likenesses from custom trained models so the results I have been looking for have trended towards more regular seeming images. In the future I am going to strive for more baroque creations that push the envelope of what is conventionally aesthetically pleasing.

What’s next

Interestingly, I got the most responses to this series have been when I articulated why I was so interested in AI-generated art. Specifically, that Ai image generators give me access to technical mastery that was beyond my grasp. In addition, working with the models has given me an understanding of style on a deeper level than I have ever experienced.

I am intensely curious about what kind of changes AI-generated art will wreck on the visual arts industry. I am using the tools in my creative process to learn more and see where they add value. Notwithstanding the fact that I am more concerned with marketing strategy than visual expression at this point in my career, I am an artist at heart and continually create in various media to further my professional and personal initiatives.

Therefore, in the following articles, I am going to focus on intention. Ai image generators can make pretty pictures of all kinds of things. Of course, that is what they are designed to do. What’s of value to me is if they can generate the specific something that I am trying to visualize and create. With that in mind, I plan to employ AI image generators in my workflow. To find out where they speed up and improve the process.