TLDR; Tried to create an AI generated holiday card. Didn’t work out.

The challenge of AI generated images is that they are great for creating fully-realized images if you aren’t that particular about what the subject is. If you want something specific, AI image generation is a bit more problematic. Accordingly, I have been focused on creating self-portraits. After coming across the process for fine-tuning Stable Diffusion with a custom token, I trained up models with images of myself.

With this token in the can, I then tried to generate pleasing photos of my token in specific scenarios and styles. You can see my mixed results so far. In this latest experiment, I trained up token for each of the people in my family. The specific intent was to create a humorous holiday card.

The recipe for training up a token using Dreambooth and Stable Diffusion is in LastBen’s github depository here. You can run the training and create images for free on Google Colab here.

Preprocessing the Instance Images For AI Generated Holiday Card

First, I cropped all the images to square in Photoshop. I cropped each image manually to center the area of focus, in this case usually the face of the subject. Next, I recorded an action for resizing to 512 x 512 and saving. I then used a batch automation process to use the recorded action and override the save action by naming all the images after the token. I set up a scheme in the Photoshop batch dialog box. So I would up with a folder of images named notanne.jpg, notanne(1).jpg, notanne(2).jpg etc.

Contact Sheet of Instance images

Training Parameters for Dreambooth

- Instance images

- 31 Anne

- 34 Zane

- 51 Meighen

- 71 Sabin

- Base Model: Stable Diffusion v1.5

- Trained for 12,000 steps

First AI Generated Images From The New Model

My thinking here was that naming a particular artist seemed to have resolved human features in previous inferences. Not so much here. The features from each token are blended, and it seems like the first two tokens mentioned get most of the weight.

Disappointing Results

Few of the people rendered are a very good likeness of the person trained on. There is a lot of blending of the features of the people. At this point I started thrashing. Here’s some of the results just for fun.

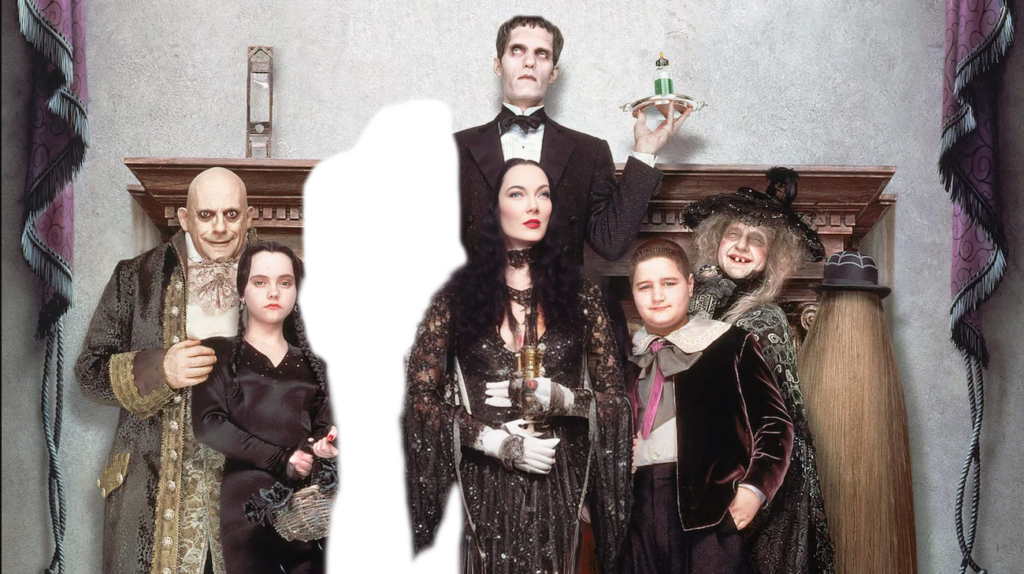

I had dreams of creating dozens of holiday scenes and running a series of holiday cards on Moo.com. Each card would be unique. I could use Stable diffusion to generate scenes. Alas, it was not to be. One of the concepts was to make a scene of the Speiser family as the Addams family.

AI Generated Addams Family

I found an image on Google of the Addams Family from the 1991 movie.

My first img2img with text prompt yielded something less than I was looking for. I’m sorry now that i didn’t save the image. Instead i decided to try inpainting. I took the original image into Photoshop and masked out the one character a time.

The Final Result

Appropriately weird, but lots of artifacts. I don’t know where the guy in the glasses right over Gomez’s shoulder came from. I’m sure with several more runs, I would refine this process and get more interesting results. The whole process however was taking too long and I lost interest.

Some Thoughts of Creating What You Have in Mind

The amount of manual work to create something like I had envisioned was as much or more than what I could have composited manually in Photoshop. In Photoshop, I would also have a lot more control over the imagery. This is a idea that I have run into several times when working with generative AI. You can create surprisingly finished and developed images if you are not too picky about the exact subject or composition. When you want to make something very intentional that doesn’t yet exist, the tools are unreliable. They require a lot of technical work like masking and prompt engineering.

A Lot of Work Goes Into AI Image Creation

There is a lot that goes into creating a specific and intentional image. Although you can point your browser to Lexica.com or Huggingface and start banging out images, to create something specific and intentional requires quite a bit of doing. You need to set up your own instance and train it on the subject and/or style that you want to create images of. From there you need to set the parameters: steps, scheduler and tweaks. Finally you get to the prompt.

Prompt Engineering

The prompt is a whole discipline in itself. Prompt engineering is the valuable skill. In the version of Stable Diffusion that I am using v1.5, the model was trained on specific artist styles. The tokens for these style bring with them not only the brushwork and color sensibility of the artist work but also some of their composition. These artists styles have served as a kind of shorthand. Now with the release of v2.1, the artists work have been disassociated with their name in the caption, so that invoking the artist no longer brings their style to generated images. (If I understand this correctly)

You can still prompt for the flavor you are looking for, but you have to do it generically. The universe of prompts is infinite and the combinations of prompts that yield particular styles is not obvious. That’s why users have created custom models that more reliably yield the results that they are looking for. Aside from the stock Stable Diffusion releases, there are countless fine tuned models (such as this one) as well as a plethora of embeddings and hypernetworks that individuals users are employing to create images.

Life After Rule 34

An amazing amount of these custom models, embeddings and hypernetworks are focused on creating pornographic anime characters. This use case has animated the work of early adopters of every new technology. However, once we get past the initial flush of horny enthusiasts, I can see evolving a real value chain of experts who can help engineer prompts that will reliable yield images in certain compositions and styles.