Third experiment creating a self portrait using an AI image generator.

In my second experiment, I attempted to create self portraits using by fine-tuning the Stability Diffusion AI image generator Using Dreambooth on Google Colab, I trained a token for myself to use image prompts. (See how to create art from your face for free, here) You can do this yourself. It is free and easy on an ordinary computer. Watch one of these videos to see how it is done. The beauty is that you don’t need a 24GB GPU or even a 12GB, you can do all the processing in the cloud on Colab.

Creating a “realsabin” token

I created a token called RealSabin and trained the AI on 20 images I selected. There was little method to the training images, I scrounged up pics I had on my hard drive with very little filtering. My criteria was that they be complete images without too many extraneous shadows and without other people. However, they were all basically snapshots. You can see the results in my post, Generative AI – Self Portrait.

Learnings from last experiment

- A lot of the inference images didn’t look me. So, I wondered if having more images of my features with consistent lighting might help.

- The AI was very generous in how it portrayed my apparent age in the last go around. I used all contemporary images in this training set.

- The AI was pretty good at headshots with a smile or neutral expression but seemed to mangle the face if there was any emotion. I put together a whole panoply of emotion images for training data. Of course, the AI image generator did not have the text-image pairing, but I wondered if perhaps it could relate model it had for happy to my smiling images.

I don’t have that solid of an understanding of how the text to image transformation works, so all of these hypotheses and strategies could be stupid.

What happens when you use 200 images to fine tune the AI image generator with Dreambooth and Stable Diffusion?

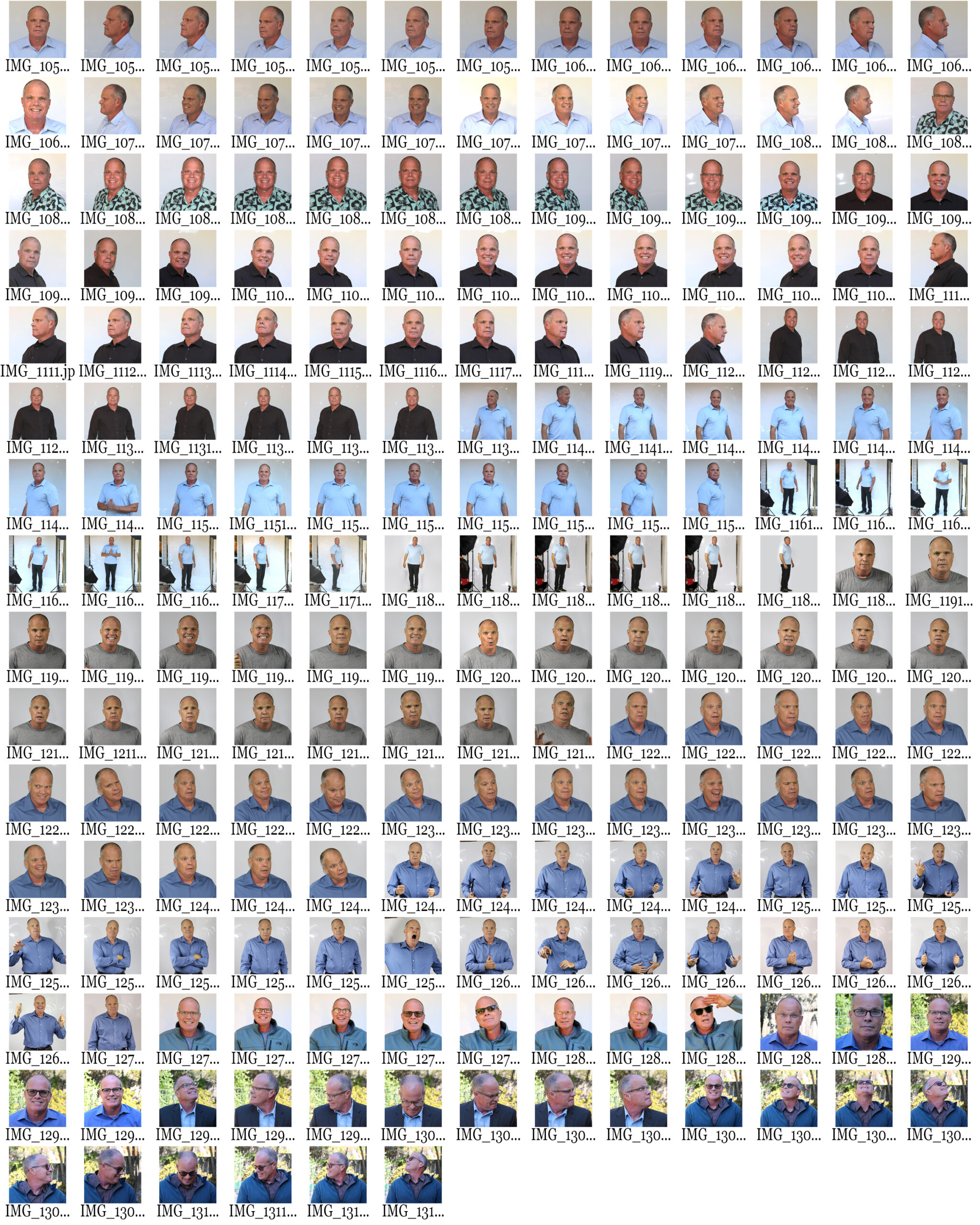

- Used 200 images all shot specifically for this purpose.

- All images contemporary, shot within the last 3 days.

- Headshots, torso shots, body shots

- With glasses and without. Several different pairs of glasses.

- All wardrobe was blue, brown or gray. I’m toying with making color one of the dimensions of my personal brand. And the color scheme of light blue and brown is the scheme.

- I tried to get the lighting consistent. I was working by myself and I had some trouble with the exposure shooting against a white background and not having a lighting model. I bought a remote after shooting this series, so I won’t have this problem in the future.

- You can see the breakdown below in the contact sheet.

Ai image generator training images

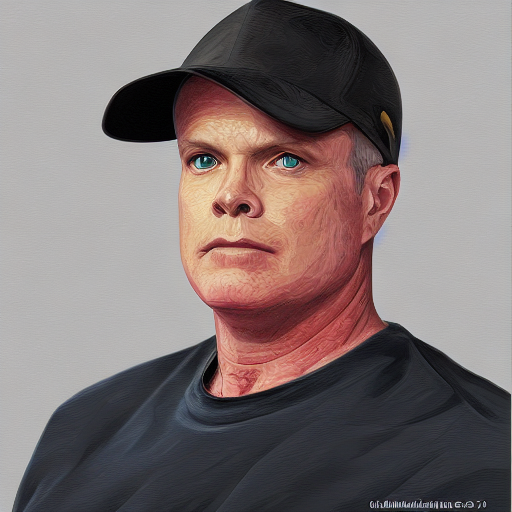

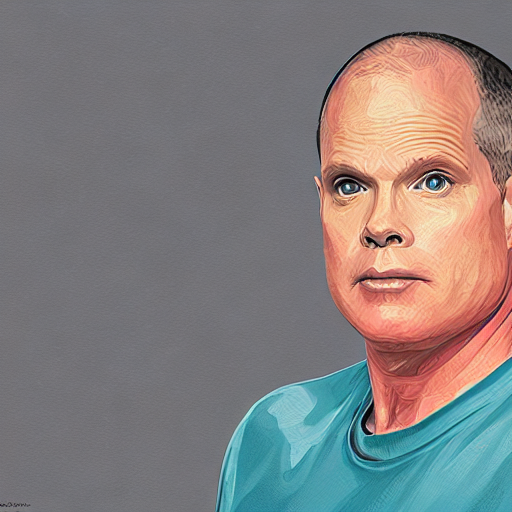

First results

I started out with the default prompt in the notebook. I configured the inference console to generate 4 images at 512px x 512px.

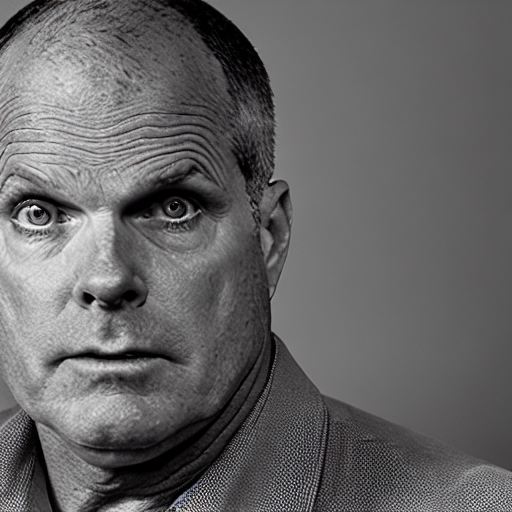

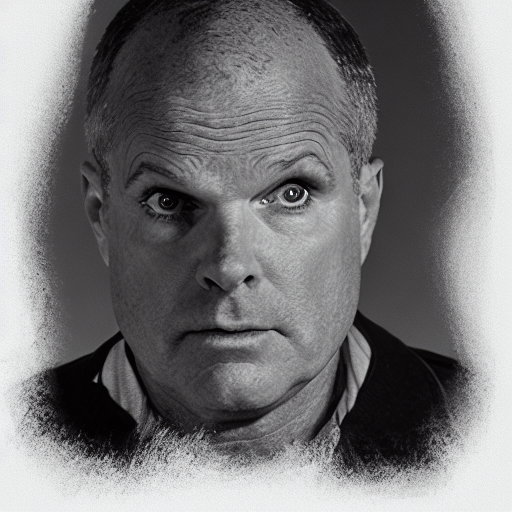

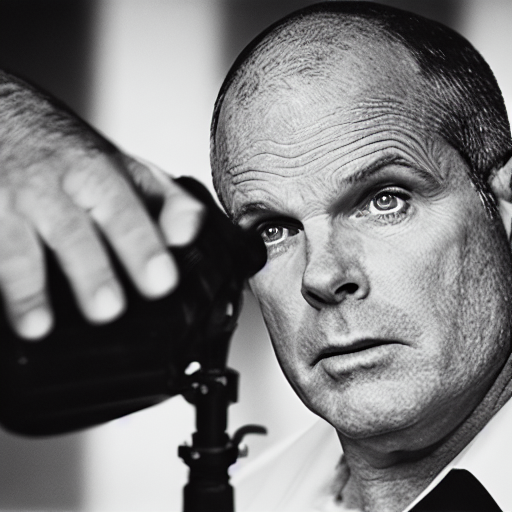

Results: A lot better likeness than the last go-around trained with only 20 images. Not sure what the gigantic forehead is all about. In these three images you can see the problem that plagues almost every image. Weird ass eyes.

The problem of weird-ass eyes.

Many of the AI generated images on Stable D, MidJourney and DALL-E suffer from weird rendering 0f eyes (and often hands). I’m sure there is an explanation for this, but Stable D really mangles the eyes on otherwise very accurate likenesses. Another guy is using a tool on Tencent’s ARC site called Face Restoration to correct for the eyes. Face Restoration was designed to use AI to restore damaged photographs and does a good job at fixing the eyes. I ran most of the Stable D outputs through it and composited the just the eyes. Some of the images I’ll show I’ve done this to. (It will be obvious)

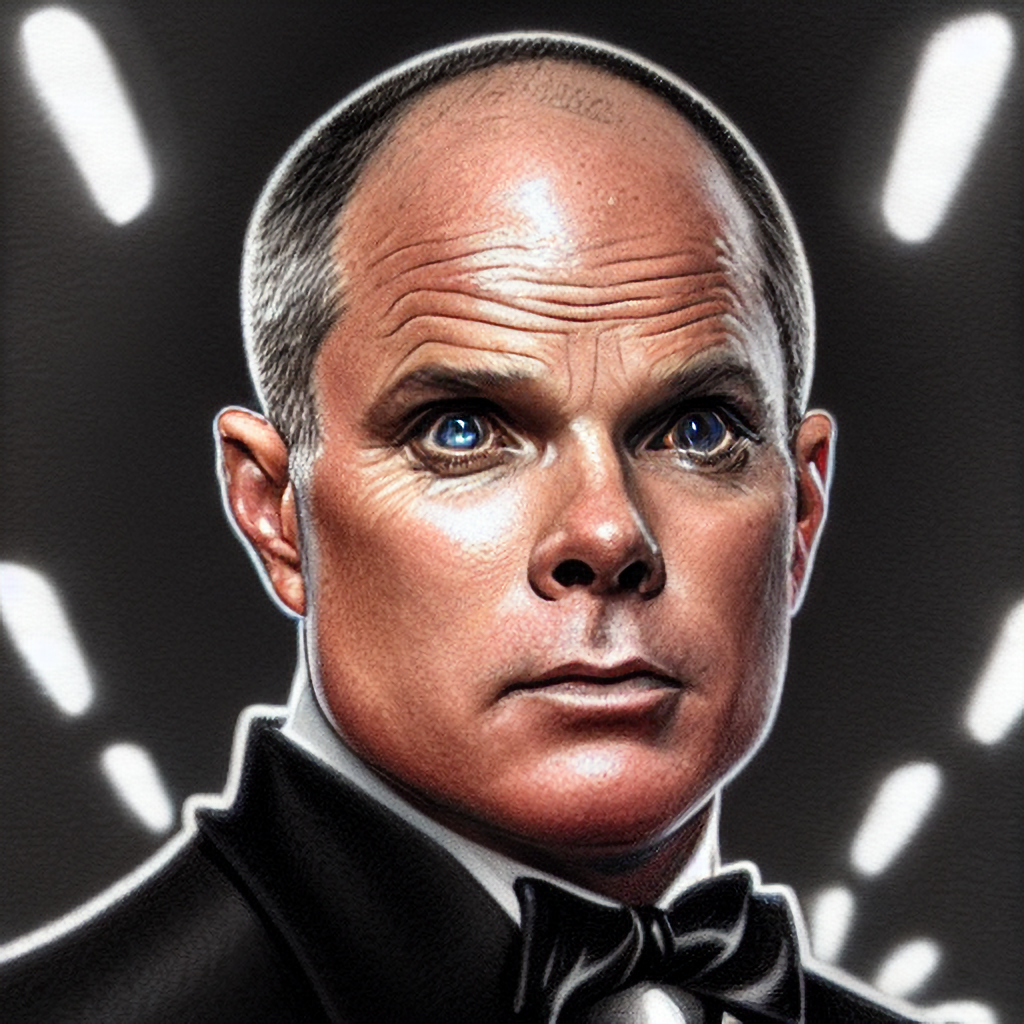

At Studio 54

Who doesn’t wonder what it would have been like to be in the glitterati of New York’s swinging 70s? , I prompted the model with some historical context.

The poses and camera angles all evoke the scene pretty well with my likeness.

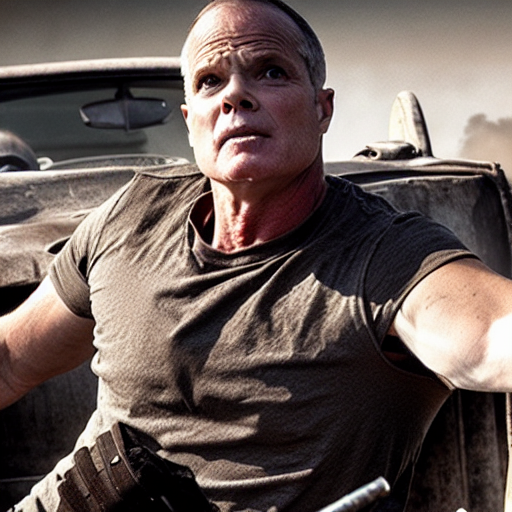

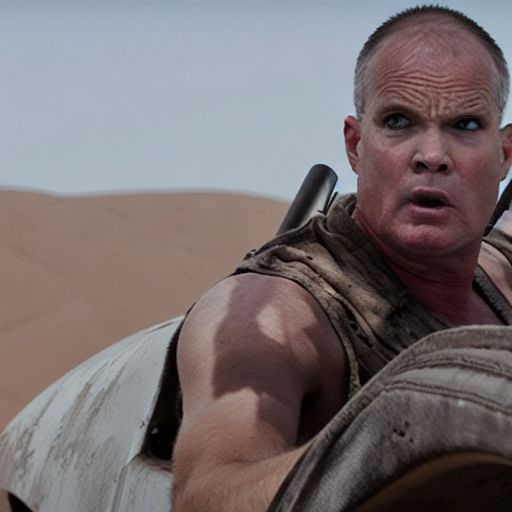

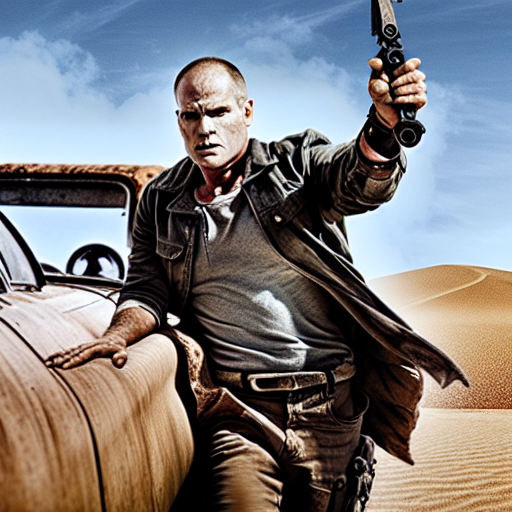

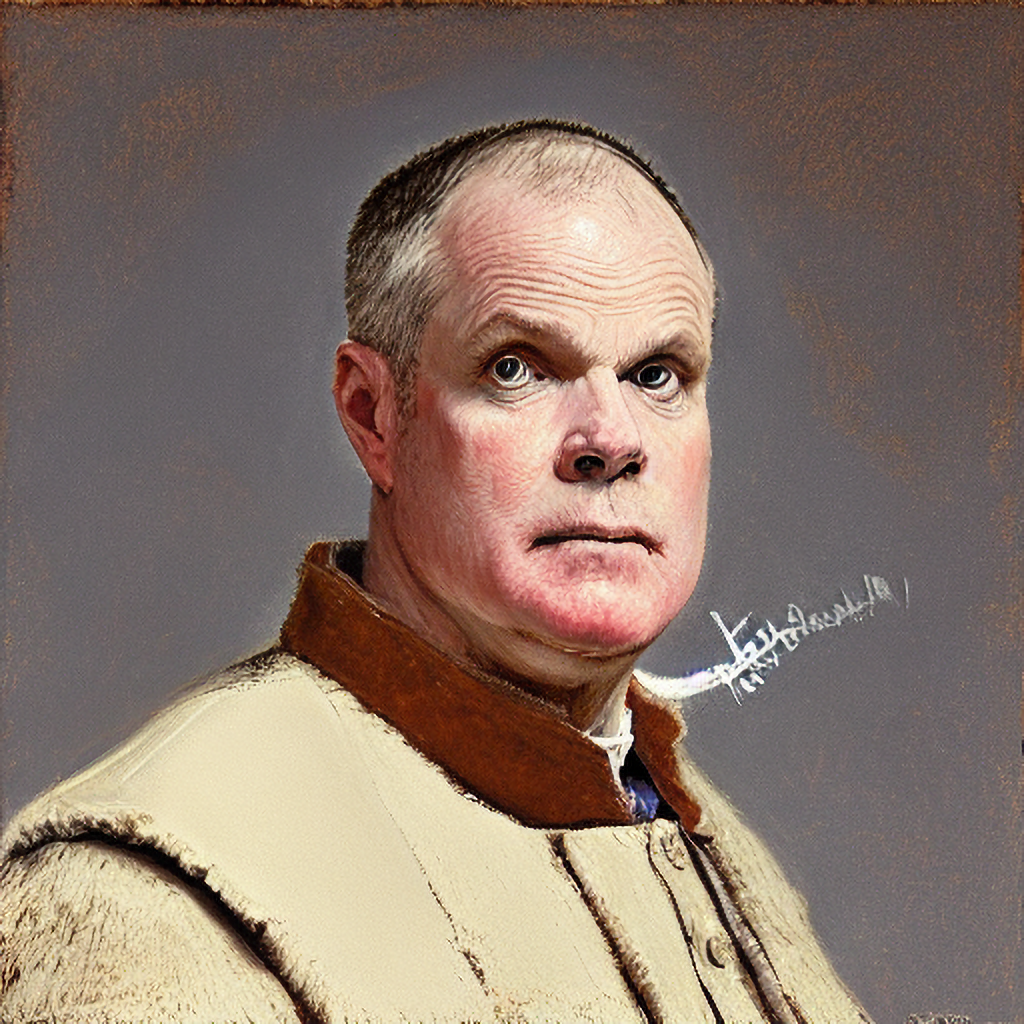

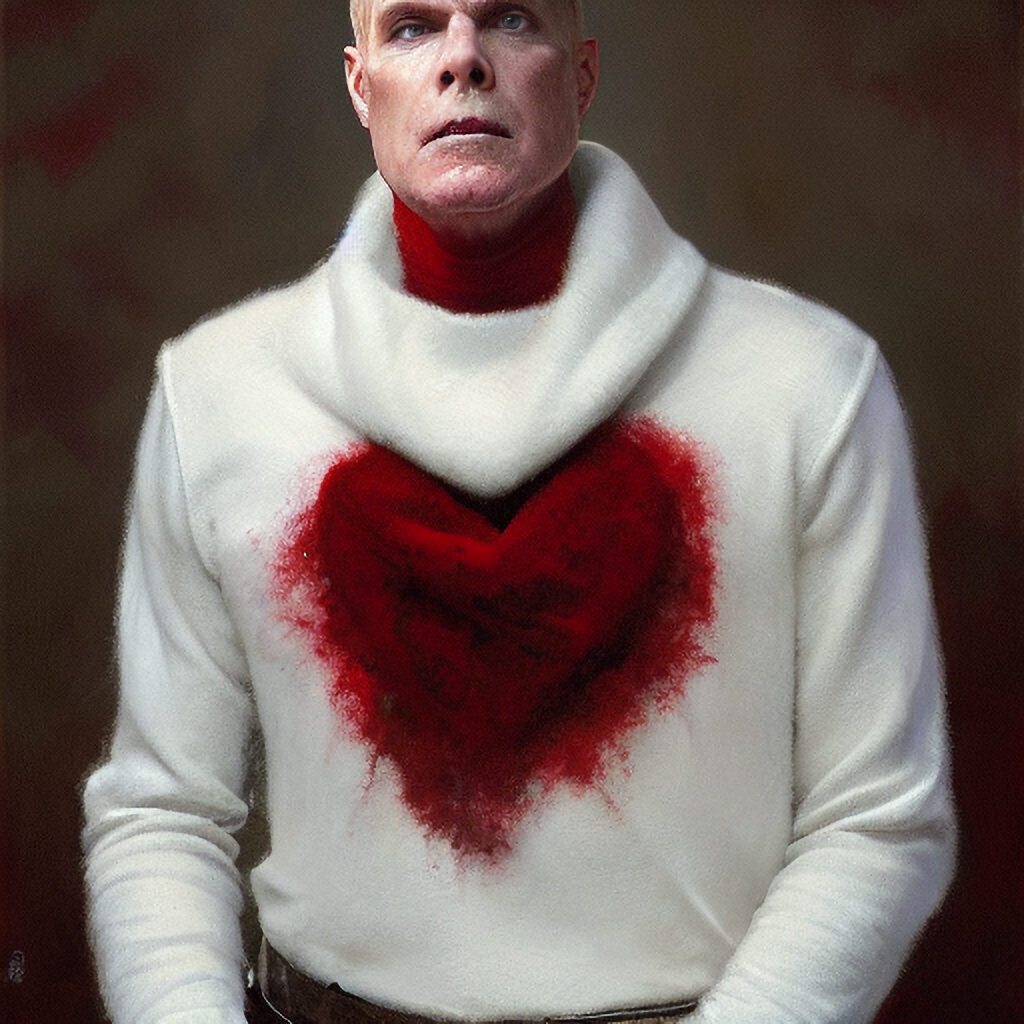

Movie Key Art

Not bad likenesses at all.

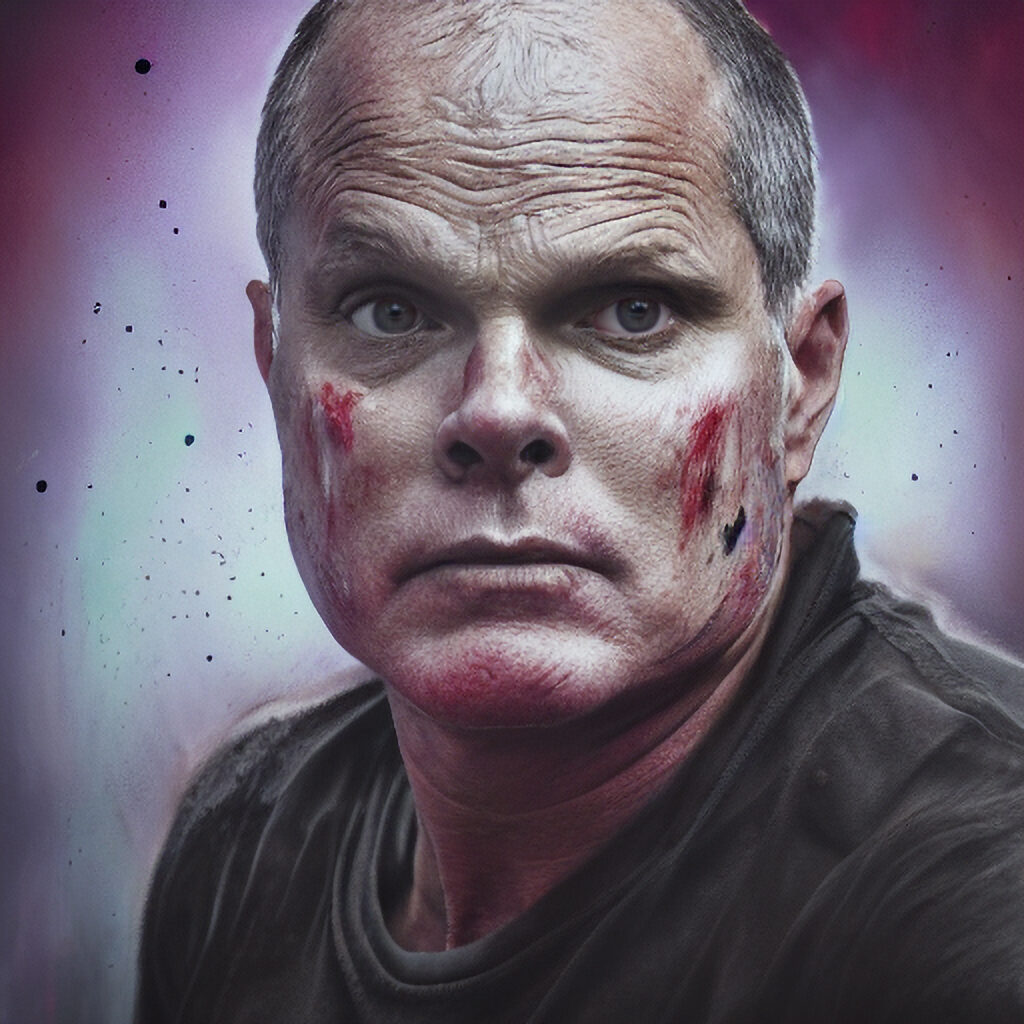

Specific movie key art

In addition to eyes, Stable D has a problem with hands.

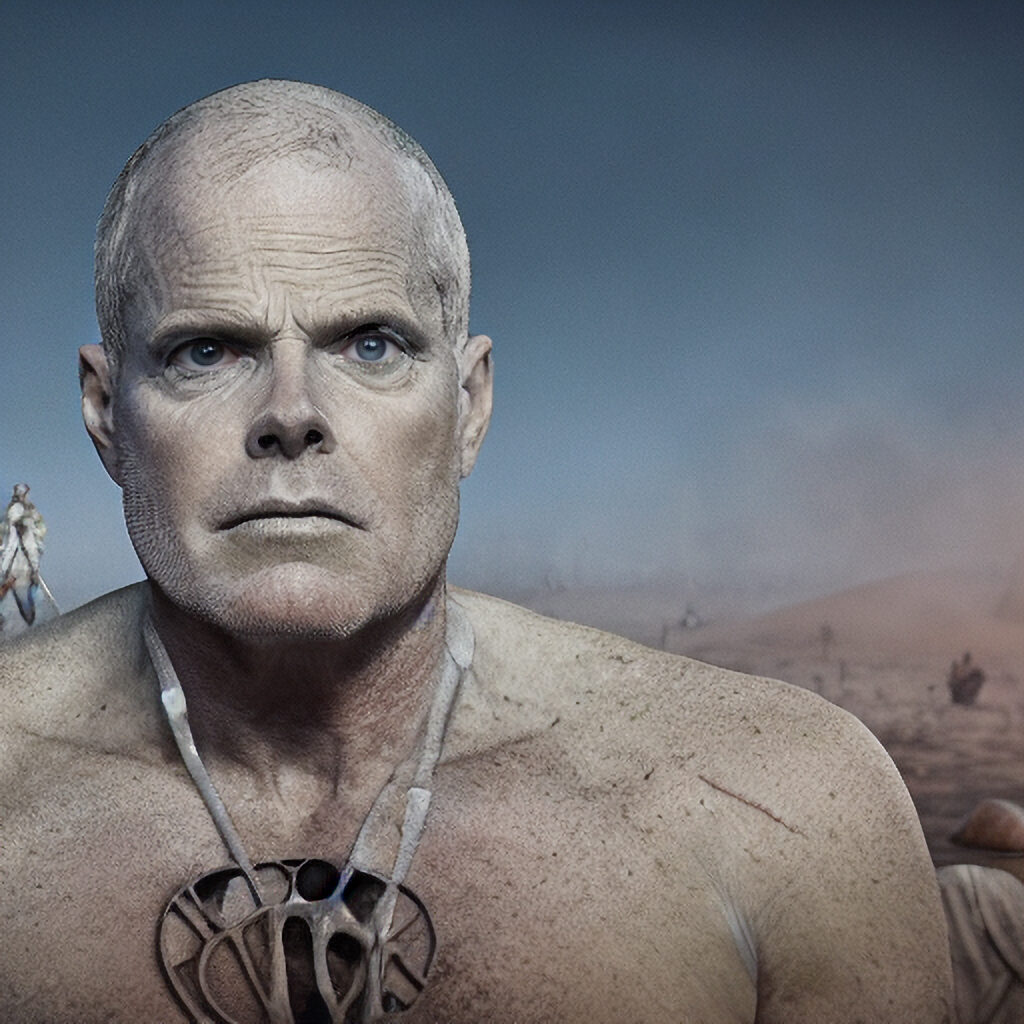

Actually in a specific movie

Mixed success here. Some generative AI images were really good. The ones of Fury Road worked out really well. That might have something to do with George Miller’s cinematography. His style is to always keep the subject in the center of the frame. This might have led to a lot of congruence between the model it had for Mad Max and the images with similar composition in the training set I used.

Gender-bending dating site profile pic

I wanted to see how the fine-tuned image generator did with changing the apparent gender. In the first run, the AI spit out three images of my likeness as a man, even though I specifically prompted with the class woman. On the second run I added more adjectives that tend to be used only with women to see if that influenced the rendering..

I think it did, but it weighted the prompt toward those adjectives and gave my token less weight so the images of women don’t look much like me.

Adding ethnicity to the AI image generator prompt

Noice.

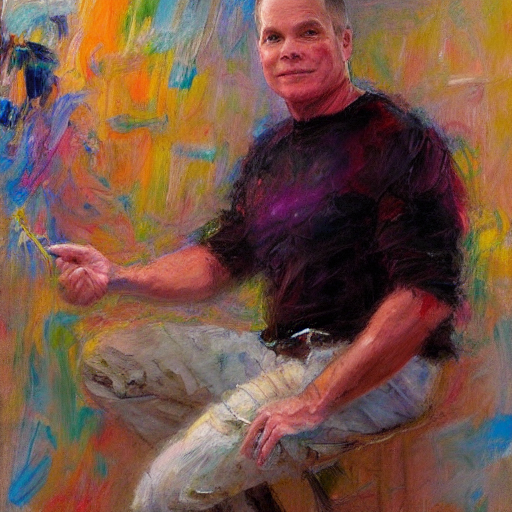

Annie Leibowitz and Rembrandt

In my last experiment when I prompted the AI image generator with the name of the artist, instead of a work in that artist style I got the artist themselves. Not so in this go around. On prompts of Leibowitz and Rembrandt, I got a pretty good likeness. On the prompts with multiple artists, I got some salient features of all of the artists mentioned.

Freestyling with prompts on Lexica and MidJourney

Having covered a lot of the prompts that I ran in the first two experiments, I decided to freestyle a bit and see what more involved prompt yielded. The Burningman photo was one of the best I’ve seen (after I fixed the eyes.) The other two images are evocative, but don’t look like me.

Film Noir

The AI didn’t really get mischeivous or film noir. Still some good images.

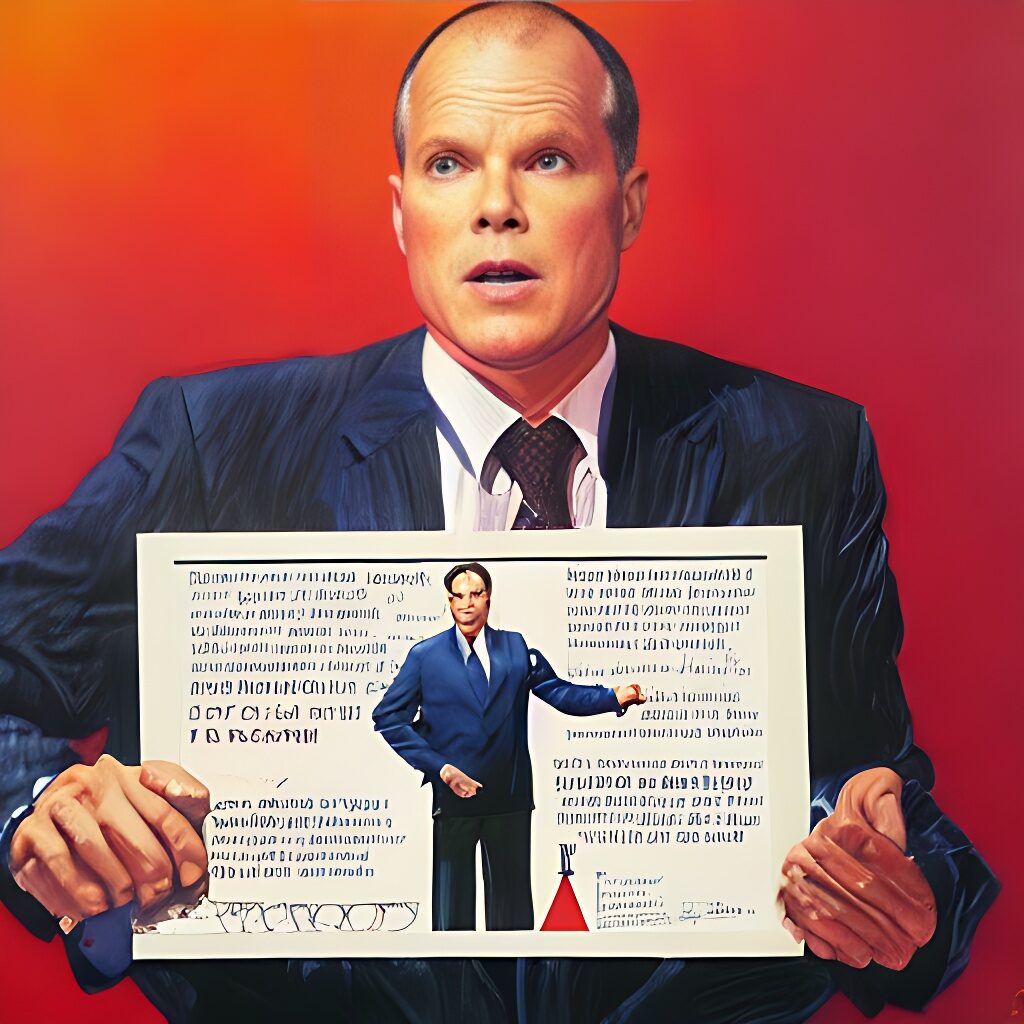

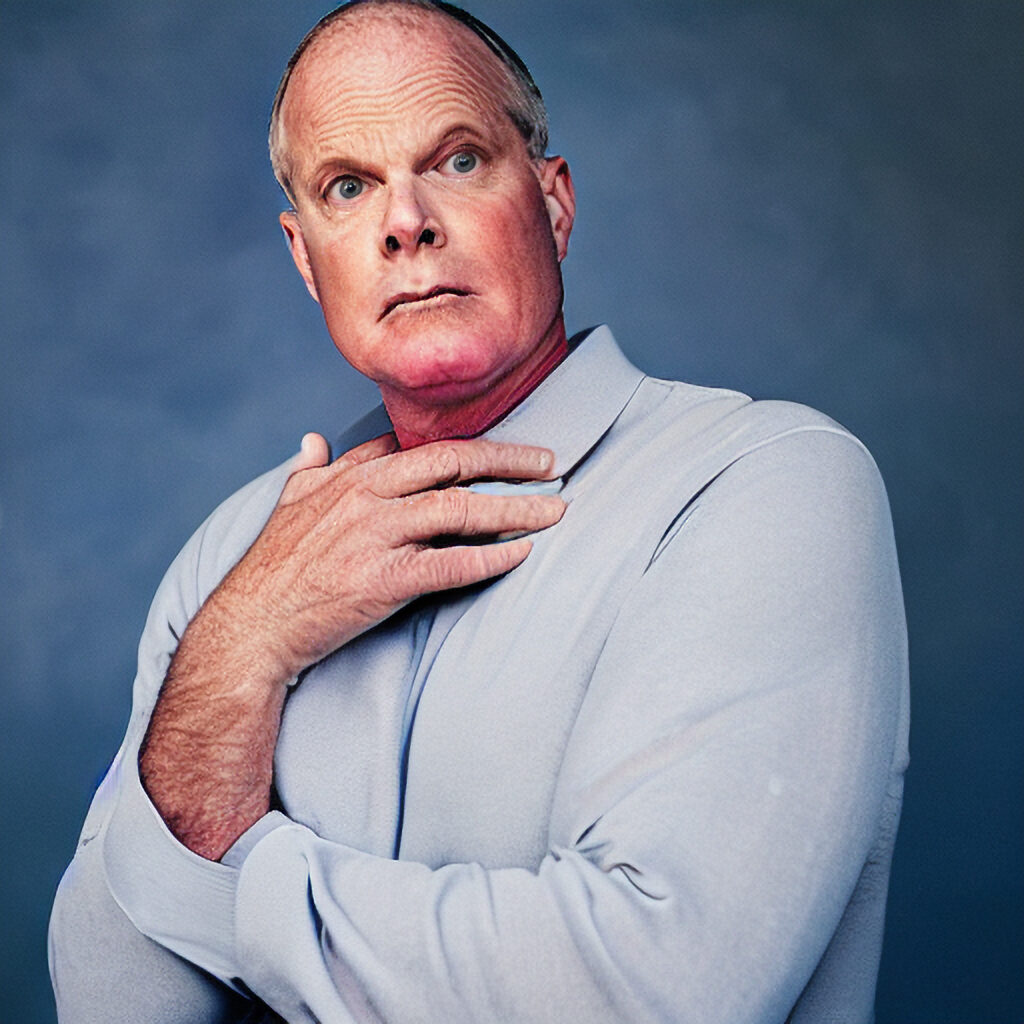

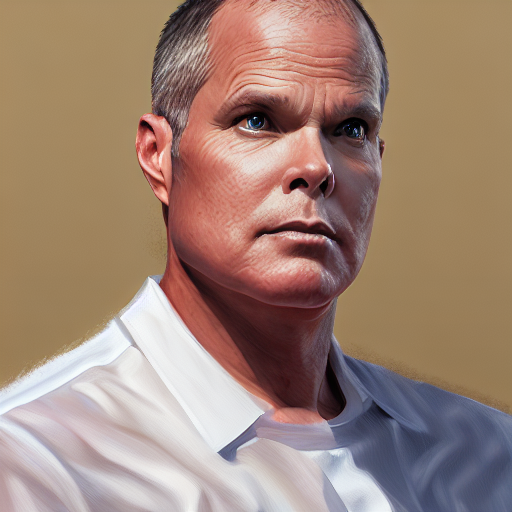

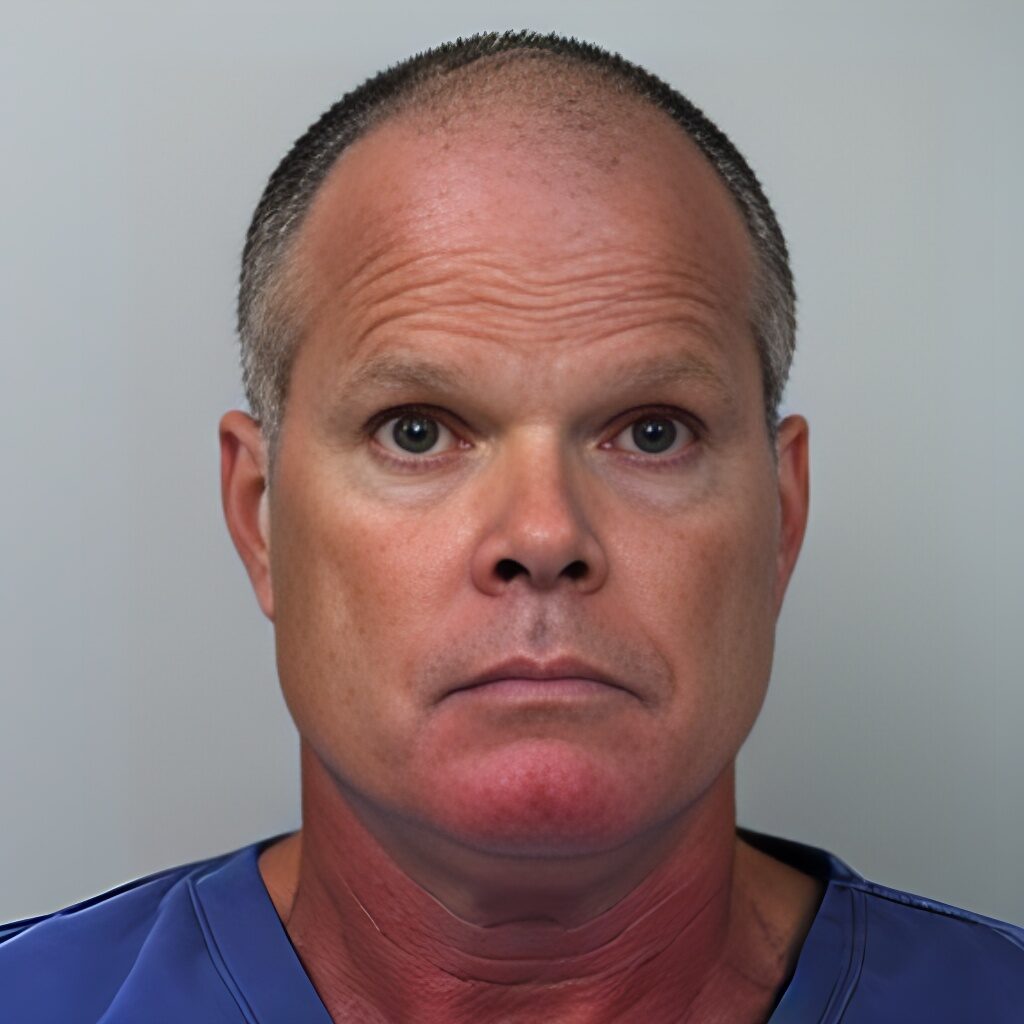

Back to the boring stuff – Corporate headshot

The main use case I was trying to test AI generation for was the creation of headshots for corporate purposes. My fine tuned model did some pretty good stuff, but not really close enough where I could use it and no one would sense it was off.

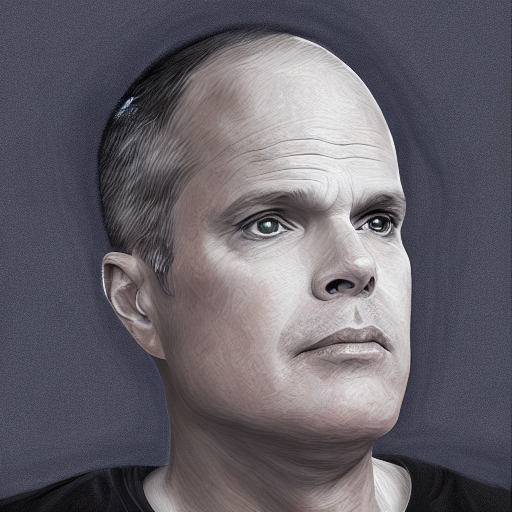

Conclusions

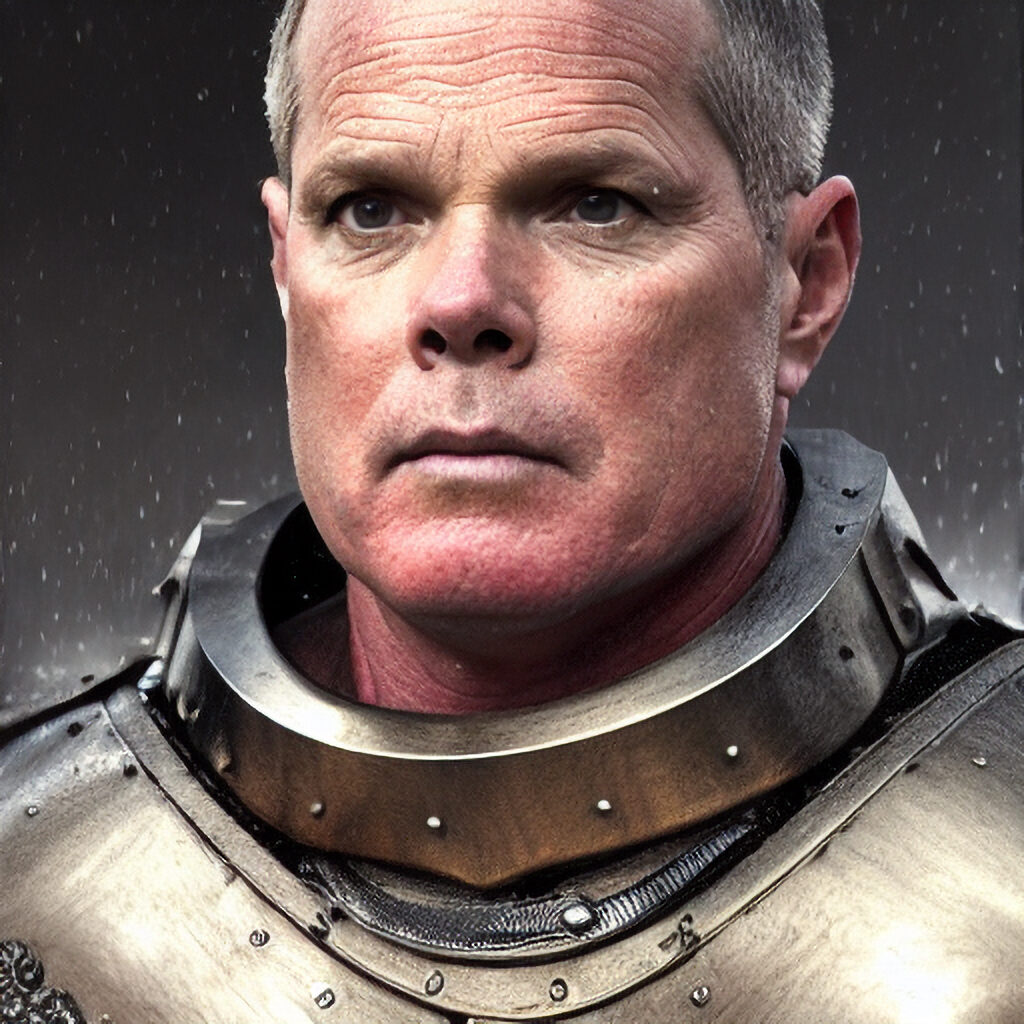

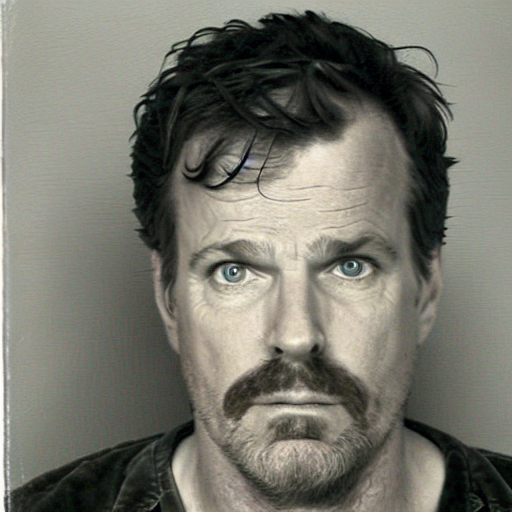

My theory was that more images would yield better likenesses. This proved to be true but not across the board. Perhaps I gave too many images of the same composition and angles. There was not a lot of melding of visual concepts that would satisfy the prompts. I think perhaps all those images lead to over fitting without leaving much room for other concepts. For example, look at these two images:

The first conveys to me a lot more of the characteristic despair that I see in most mugshots. The second one has very little emotion. It seems like the AI leaned a lot more heavily in fine detailed features of my likeness than the emotional cues of the mugshot style.

The next AI image experiment

For my next experiment, I’d like to see if I can broaden the range of results that the AI can produce by giving it more salient features for realsabin model. My strategy is to use fewer clinical headshot images and introduce some other types of images. Staging these scenes takes a lot of time and effort, therefore, I’m going to use fewer photos in general.

Training set of 100 images

- Headshots high, low and eyelevel ; range of angles to camera

- Torso shots high, low and eyelevel ; range of angles to camera

- Full body shots range of clothing, overcoat, suit, sportscoat, polo/chinos, tracksuit, shorts/hoodie, swimsuit, wetsuit, tshirt/shorts, running clothes

- Range of emotion shots

- Candids and snapshots

- Earlier photos with a beard and mustache

- Costumes: Pirate, calavera (dia de los muertos), kabuki actor, rocker, latin band, wild west, skeleton, batman, stormtrooper, santa

- A range of headgear fedora, beanie, baseball cap, bowler, straw hat, cowboy hat, scarf, watch, gloves, headlamp,

- Action shots doing things like working on a computer, writing, reading, cooking, gardening, walking , running, yoga, sleeping, eating.

- Range of motion shots: jump, squat, forward bend, child’s pose, upward dog, downward dog, staff pose, tree pose, proud warrior 2, savasana

Additional Consideration

I wear glasses most of the time. That’s why I’m going to shoot about half of the images in every set wearing a range of glasses. To expand the range of contexts that the AI will consider, in about half of the images I’m going to replace the background with a variety including: the beach, startup office, urban outside, rural outside, party, nightclub, meeting, suburban street, living room, kitchen, bedroom, I will also include 10% of the shots shirtless. My thinking is that this will give the AI more freedom to come up with solutions that include the salient features of my likeness and other concepts included in the prompt. Look for the next post in the series.

Other posts in the series

Part 1: AI Generators and Self Portraits

Part 2: Generative AI Self Portrait

Part 3: Generative AI – Self Portrait #3