Second attempt to create a self-portrait using generative AI.

In my last experiment, I tried to create self-portraits using the generative AI Midjourney. Through a combination of image and text prompts, I tried to get the AI to generate a recognizable likeness, with poor results. For my second experiment, I used another AI image generator, this time trained on a set of photographs of myself.

Create art from your likeness with Dreambooth and Stable Diffusion on Google Colab

You can do this yourself. It is free and easy on an ordinary computer. Watch one of these videos to see how it is done. The beauty is that you don’t need a 24GB GPU, or even a 12GB one; you can do all the processing in the cloud on Colab. Thanks to James Cunliffe for the helpful write-up.

Train the generative AI on your own pictures

You train a token for yourself that you then use in your prompts for generating images. I created a token called RealSabin and trained the AI on images I selected. The articles I read initially said you need around 20 photos to train the AI. Some said they could get recognizable results with as few as 3-5. After reading more about it, I concluded that 200 images is closer to the ideal for fine-tuning the model.

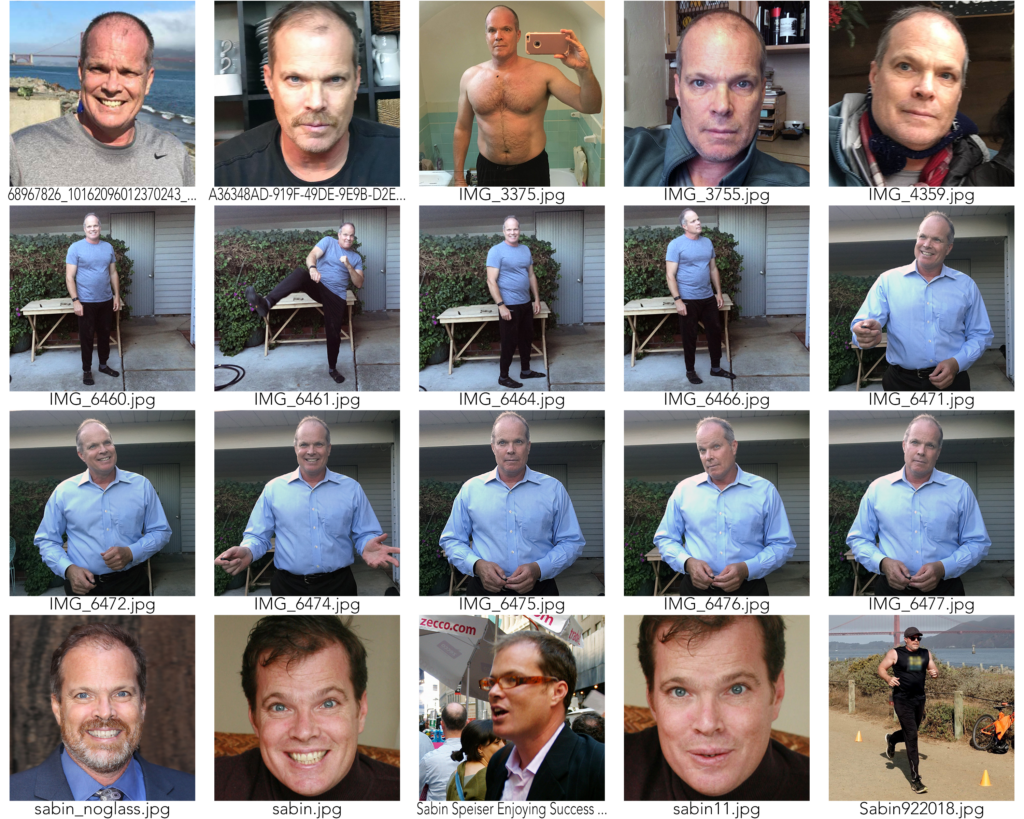

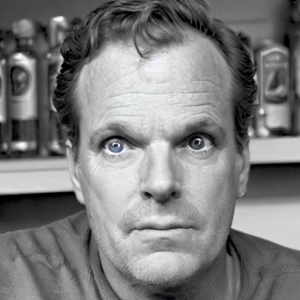

For this experiment, I put together 20 shots: a range of closeups, medium torso shots, and full-body shots. Some of them I created specifically for the task. I shot most of the torsos by setting the camera on a tripod on my front patio and using a remote control to trigger my iPhone 6S Plus. Other images were snapshots from my photo library. Most photos were of fairly recent vintage; the oldest was from 2006.

Training images

First results

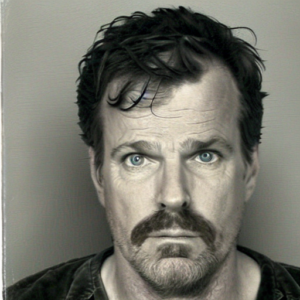

I started out with the default prompt on the notebook.

Not really what I was looking for. About the only thing that resembles me is the furrowed brow.

Gender-bending on the Tinder profile

If the most basic prompt didn’t give me what I wanted, why not stretch a little with a gender-bending Tinder profile photo? My partner would object if I had a Tinder profile, but I understand that good photos are essential to success on the platform. It was a great opportunity to see how the AI dealt with a different gender prompt. I tried a specific context, “prompt: attractive realsabin female profile photo 55-years-old”. Stable Diffusion seems to make every rendering a lot younger than the photos I trained it on, so I added 55-years-old to the prompt. Here are the results.

Stable Diffusion tends towards Asian faces, if you don’t specify

What’s going on here? First off, I needn’t have added my age, because Stable D has me looking to be in my mid-70s. Secondly, with no prompting from me, the AI made me a large-breasted Asian. I appreciate the AI’s generosity with bust size, but I was surprised by the assumption of genotype. It turns out that Stable D is biased to deliver Asian faces if the genotype is not specified, I assume to compensate for an underweighting of Asians in its image training data.

All of the pictures I trained my token on are of me, a fairly typical light-skinned Northern European. I think I look great Asian, but my goal here is to generate good likenesses of myself. To accomplish this, I started putting “Asian” as a negative word in my subsequent prompts. Irrationally, I feel uncomfortably racist doing so. Here is another rendering without the negative prompt of “Asian”, and you can see how the AI tries to split the difference between my features and skin and those more typically Asian.

Here’s the same prompt with negative prompt of Asian:

Tinder profile pic correcting for genotype

I tried another way of counteracting the Asian bias. Instead of using the negative prompt, I tried using an affirmative genotype. Once again the AI aged me harshly, but I have to admit, she does look like me.

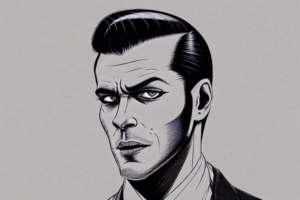

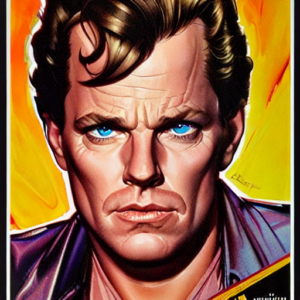

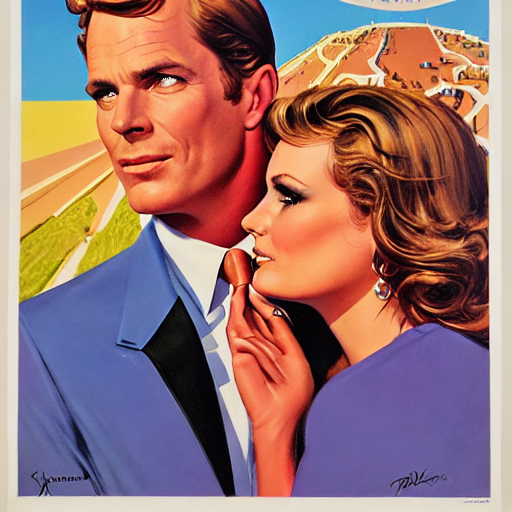
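
The two approaches above, a negative prompt versus an affirmative descriptor, can be sketched as a small prompt builder. This is only an illustration in plain Python, not the notebook's own code: `build_prompt` and `PromptPair` are hypothetical names, and the affirmative descriptor "Northern European" is my assumption based on the description earlier in this post, not the exact wording used for the renders shown.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptPair:
    """A prompt plus the negative prompt that is submitted alongside it."""
    prompt: str
    negative_prompt: str


def build_prompt(base, affirm=(), avoid=()):
    """Append affirmative descriptors to the base prompt and collect
    the terms to avoid into a comma-separated negative prompt."""
    prompt = " ".join([base, *affirm])
    negative_prompt = ", ".join(avoid)
    return PromptPair(prompt=prompt, negative_prompt=negative_prompt)


# The prompt quoted earlier in this post, using the trained token.
BASE = "attractive realsabin female profile photo 55-years-old"

# Approach 1: leave the prompt alone and steer with a negative prompt.
negative_variant = build_prompt(BASE, avoid=["Asian"])

# Approach 2: no negative prompt, add an affirmative descriptor instead.
# "Northern European" is an assumed descriptor for illustration only.
affirmative_variant = build_prompt(BASE, affirm=["Northern European"])

print(negative_variant)
print(affirmative_variant)
```

Keeping the base prompt fixed and changing only one of the two fields makes it easier to compare the renders side by side and see which kind of steering is doing the work.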

Stylized prompts are more forgiving

An absolute photo likeness is a tall order. Stylized renderings are more forgiving. Here are some really stylized renderings that I like. I freely borrow from other users on Lexica.com and Midjourney galleries to help me create prompts for what might make a cool image.

Funny thing sometimes happens when mentioning an artist

As in the examples above, when you mention a painter in a prompt to indicate style, you usually want that artist’s style. However, the AI sometimes gives you the artist instead. I thought it would be straightforward to ask for a portrait by Annie Leibovitz and Rembrandt. Not so much.

Context is king

I have had the most success getting a close likeness when I provide a context for the photo that is similar to the context of the training photos. For example, you saw the corporate headshot above. Aside from the iridescent blue eyes that the AI seems to put in every render, these look a lot like me. Here are some other context prompts:

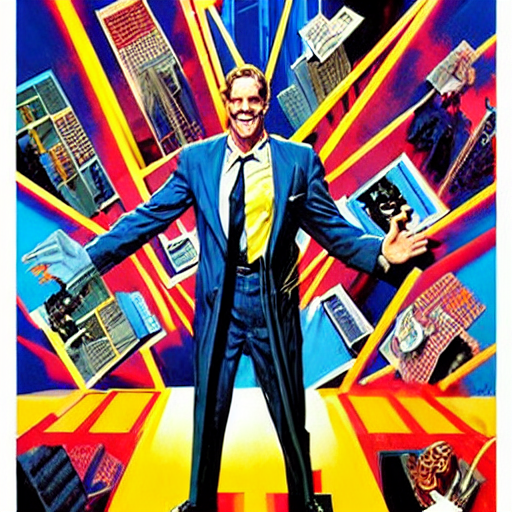

Conceptual prompts

I tried to insert my likeness into other contexts that might be interesting. First was entertainment. I tried several times to have myself be the lead in films or plays. The AI seems to choke on substituting the face of a recognizable lead actor. The features either get very crunchy or the AI tries to meld my likeness with the iconic actor who played the role.

What I found worked better was to give context cues without mentioning the particular film or play. Instead of the movie, I used the term “key art”, which is what the foundation art in movie posters is called. I got some interesting results.

My hypothesis: Need more training images

Some of these prompts give me a result close to my likeness, but a lot are misfires. My theory is that 20 images is just not enough. Also, a more methodical selection of images might give the AI the data it needs to consistently solve for the prompts. For my next experiment I intend to supply 200 images from a variety of angles. I don’t intend to vary the height of the camera much.
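
One way to make the selection more methodical is to tally the catalog by shot type and angle and look for empty combinations. The following is a rough sketch, not a finished tool: the file names and the three angle labels are made up for illustration, the shot types are the ones from my first training set, and `coverage` and `gaps` are hypothetical helper names.

```python
from collections import Counter

TARGET_TOTAL = 200  # the number of training images planned above

# Shot types from the first training set; angle labels are assumptions.
SHOT_TYPES = ("closeup", "medium torso", "full body")
ANGLES = ("front", "three-quarter", "profile")

# Hypothetical catalog entries: (file name, shot type, angle).
catalog = [
    ("img_001.jpg", "closeup", "front"),
    ("img_002.jpg", "closeup", "profile"),
    ("img_003.jpg", "medium torso", "front"),
    ("img_004.jpg", "full body", "three-quarter"),
]


def coverage(entries):
    """Count how many images exist for each (shot type, angle) pair."""
    return Counter((shot, angle) for _, shot, angle in entries)


def gaps(entries, shot_types, angles):
    """List the (shot type, angle) pairs that have no image yet."""
    counts = coverage(entries)
    return [(s, a) for s in shot_types for a in angles if counts[(s, a)] == 0]


missing = gaps(catalog, SHOT_TYPES, ANGLES)
remaining = TARGET_TOTAL - len(catalog)

print("Combinations with no image yet:", missing)
print("Images still to collect:", remaining)
```

The same tally could be extended with more columns later without changing the approach.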

In general, people prefer photos where the camera is at eye level. I can raise it up and down a few degrees, but extreme low and high angles are unlikely to help generate likable images. I also intend to have all images be contemporary so that I don’t have to specify the age. Stable D seems to add 10 years to every age prompt. Other ranges I want to explore are emotions and moods. I am putting together the catalog of training images and will share it in another post. Please let me know your experience if you try this yourself.